Introduction

Focaloid Technologies, a leader in digital transformation and software engineering, specializes in leveraging Large Language Models (LLMs) to create cutting-edge enterprise knowledge assistants. This white paper explores how Focaloid Technologies’ expertise can be used to build intelligent knowledge assistants that augment and accelerate knowledge transfer across your organization.

The transfer of tribal knowledge – the tacit skills, insights, and best practices that organically develop within teams – is a cornerstone of organizational learning and growth. Successful knowledge transfer empowers employees to make informed decisions, collaborate effectively, and drive innovation. Traditional methods often prove inefficient and limit the accessibility of valuable expertise.

The Challenge

Accelerating Knowledge Transfer

Effective knowledge transfer faces several obstacles:

- Time-Consuming Onboarding – New team members rely on senior colleagues for guidance, slowing down their integration.

- Siloed Knowledge – Valuable expertise often resides with specific individuals or teams, limiting accessibility for others.

- Loss of Expertise – Institutional knowledge can be lost when experienced employees retire or move on.

- Information Overload – The sheer volume of data makes it difficult to find relevant insights quickly.

The Solution

LLM Powered Knowledge Assistants

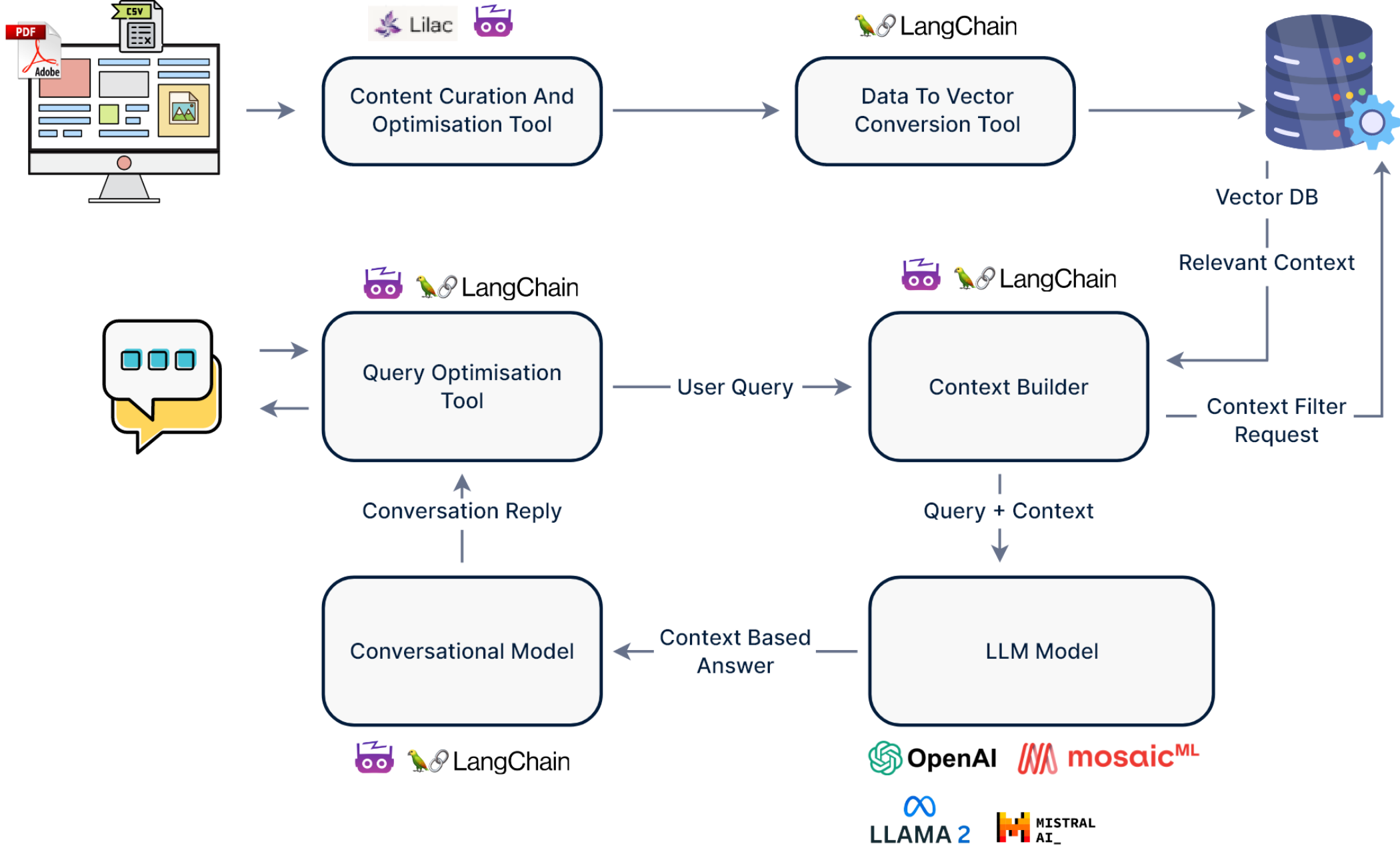

Focaloid Technologies builds knowledge assistants powered by LLMs and a robust technical architecture to address these challenges. Here’s how it works:

- Data Aggregation and Preparation

Comprehensive Data Sourcing – We connect the knowledge assistant to your existing repositories, including document stores, CRMs, code repositories, project archives, internal communication channels, and knowledge bases.

Content Curation – Our team collaborates with you to identify and prioritize the most valuable knowledge sources within your organization. This may include internal wikis, project documentation, past presentations, and FAQs.

Multimodal Integration (Optional) – Where applicable, we incorporate images, diagrams, and videos to enrich the knowledge base and enable visual searches.
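To make the aggregation step concrete, the sketch below maps records from two repositories into one shared document shape and then applies a simple curation rule that lists high-priority sources first. The `Document` schema, the export field names, and the `priority_sources` parameter are hypothetical; real connectors would depend on each system's own export format or API.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    """Common shape for content pulled from any repository (hypothetical schema)."""
    source: str
    doc_id: str
    text: str
    metadata: dict = field(default_factory=dict)


def from_wiki_page(page: dict) -> Document:
    # Assumed wiki export fields: "slug", "title", "body", "author".
    return Document(
        source="wiki",
        doc_id=page["slug"],
        text=f"{page['title']}\n\n{page['body']}",
        metadata={"author": page.get("author")},
    )


def from_crm_ticket(ticket: dict) -> Document:
    # Assumed CRM export fields: "id", "subject", "resolution", "product".
    return Document(
        source="crm",
        doc_id=str(ticket["id"]),
        text=f"{ticket['subject']}\n\n{ticket['resolution']}",
        metadata={"product": ticket.get("product")},
    )


def aggregate(wiki_pages, crm_tickets, priority_sources=("wiki",)):
    """Normalize every record into a Document, listing curated sources first."""
    docs = [from_wiki_page(p) for p in wiki_pages]
    docs += [from_crm_ticket(t) for t in crm_tickets]
    # Content curation: documents from priority sources sort first.
    # sorted() is stable, so records keep their original order within each group.
    return sorted(docs, key=lambda d: d.source not in priority_sources)


docs = aggregate(
    wiki_pages=[{"slug": "vpn-setup", "title": "VPN setup", "body": "Install the client.", "author": "alice"}],
    crm_tickets=[{"id": 42, "subject": "Login fails", "resolution": "Reset the SSO token.", "product": "Portal"}],
    priority_sources=("crm",),
)
print([d.doc_id for d in docs])  # ['42', 'vpn-setup']
```

Keeping one document shape means every later step (cleaning, indexing, fine-tuning) can ignore where a record originally came from.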

- Data Pre-processing for LLM Optimization

Cleaning and Normalization – We ensure data consistency, remove redundancies, and structure the data in a format optimized for LLM training.

Transformation and Enrichment – Raw data may be transformed and metadata extracted to enhance the LLM’s understanding of your organization’s specific context.
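Cleaning, deduplication, and light metadata enrichment can be illustrated with the standard library alone. The sketch below assumes plain-text inputs; the normalization choices (Unicode NFKC, whitespace collapsing, case-insensitive exact-duplicate detection via a SHA-256 fingerprint) and the "ticket ID" pattern used as enrichment are illustrative assumptions rather than a description of a specific production pipeline. Near-duplicate detection would need a fuzzier technique such as MinHash.

```python
import hashlib
import re
import unicodedata


def normalize(text: str) -> str:
    """Apply Unicode NFKC normalization and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()


def fingerprint(text: str) -> str:
    """SHA-256 of the case-folded normalized text, used to spot exact duplicates."""
    return hashlib.sha256(normalize(text).casefold().encode("utf-8")).hexdigest()


def extract_metadata(text: str) -> dict:
    """Toy enrichment: word count plus ticket-style IDs such as 'OPS-123' (assumed format)."""
    return {
        "word_count": len(text.split()),
        "ticket_refs": sorted(set(re.findall(r"\b[A-Z]{2,}-\d+\b", text))),
    }


def preprocess(raw_texts):
    seen = set()
    records = []
    for raw in raw_texts:
        clean = normalize(raw)
        key = fingerprint(clean)
        if not clean or key in seen:
            continue  # drop empty inputs and exact (case-insensitive) duplicates
        seen.add(key)
        records.append({"text": clean, **extract_metadata(clean)})
    return records


records = preprocess([
    "Restart the  build agent\n(see OPS-12).",
    "restart the build agent (see OPS-12).",
    "   ",
])
print(records)
```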

- LLM Training and Fine-tuning

Base LLM Selection – The appropriate LLM is chosen based on the nature of your data and desired functionality (e.g., OpenAI’s GPT-3).

Domain-Specific Fine-tuning – We fine-tune the LLM on your curated knowledge base to ensure accurate responses that reflect your company’s specific practices and terminology.
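Fine-tuning usually starts by turning curated question-and-answer pairs into a training file. The sketch below writes chat-style JSONL (one JSON object per line holding a list of role-tagged messages), the layout used for fine-tuning OpenAI's chat models; the exact format depends on the chosen base model and provider, so treat it as an assumption to check against their current documentation. The system prompt, file name, and sample pairs are hypothetical.

```python
import json
import os
import tempfile

# Hypothetical system prompt; adjust to the organization's tone and terminology.
SYSTEM_PROMPT = "You are the internal knowledge assistant. Answer using company terminology."


def to_chat_example(question: str, answer: str) -> dict:
    """Wrap one curated Q&A pair as a role-tagged chat example."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def write_jsonl(pairs, path: str) -> int:
    """Write one JSON object per line and return the number of examples written."""
    count = 0
    with open(path, "w", encoding="utf-8") as fh:
        for question, answer in pairs:
            if not question.strip() or not answer.strip():
                continue  # skip incomplete pairs rather than train on them
            fh.write(json.dumps(to_chat_example(question, answer), ensure_ascii=False) + "\n")
            count += 1
    return count


pairs = [
    ("What is a 'gold build' here?", "A release candidate that passed the full regression suite."),
    ("How do I request staging access?", ""),  # unanswered, so it is skipped
]
path = os.path.join(tempfile.gettempdir(), "finetune_train.jsonl")
n = write_jsonl(pairs, path)
print(n)  # 1
```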

- Knowledge Graph Integration (Optional)

Construction – A knowledge graph is constructed to represent relationships between entities within your enterprise data, further improving the assistant’s contextual understanding.

LLM Enhancement – The LLM is trained on the knowledge graph to reason about connections and provide more insightful answers.
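Storage and query details for such a graph vary by project. As one illustrative option, the sketch below keeps (subject, relation, object) triples in an adjacency list and uses breadth-first search to find the shortest chain of relations linking two entities, the kind of multi-hop connection that could be supplied to the LLM as context. The entities and relation names are invented, and the sketch covers graph lookup only, not training the LLM on the graph.

```python
from collections import defaultdict, deque


def build_graph(triples):
    """Adjacency list over (subject, relation, object) triples, traversable both ways."""
    graph = defaultdict(list)
    for subj, rel, obj in triples:
        graph[subj].append((rel, obj))
        graph[obj].append((f"inverse:{rel}", subj))
    return graph


def connection(graph, start, goal):
    """Breadth-first search for the shortest chain of relations linking two entities."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for rel, nxt in graph.get(node, []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append((nxt, path + [(node, rel, nxt)]))
    return None  # no connection found


triples = [
    ("Billing Service", "owned_by", "Payments Team"),
    ("Payments Team", "led_by", "Alice"),
    ("Invoice Bug #88", "affects", "Billing Service"),
]
graph = build_graph(triples)
path = connection(graph, "Invoice Bug #88", "Alice")
for step in path:
    print(step)
```

Here the assistant could answer "who should I ask about Invoice Bug #88?" by citing the chain bug → service → owning team → team lead, rather than relying on the model to infer it.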

- Conversational Interface Design

Natural Language Understanding (NLU) – The interface uses NLU techniques to understand user queries in natural language, facilitating intuitive interaction.

Contextual Response Generation – The LLM leverages its knowledge base, and optionally the knowledge graph, to provide relevant and targeted responses that consider the user’s role and the relationships between concepts.
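Role-aware, context-grounded responses are often built with a retrieval step placed in front of the LLM. The sketch below uses that retrieval-augmented pattern as a swapped-in, commonly used technique, not necessarily Focaloid's exact design: it filters a hypothetical knowledge base by the user's role, ranks visible entries with plain term-overlap cosine similarity (standing in for learned embeddings), and assembles a prompt. The document IDs, roles, and function names are assumptions, and the actual LLM call is omitted.

```python
import math
import re
from collections import Counter

DOCS = [  # hypothetical knowledge-base entries with role-based visibility
    {"id": "kb-1", "roles": {"support", "engineering"}, "text": "Reset a locked account from the admin console."},
    {"id": "kb-2", "roles": {"sales"}, "text": "Discount approval above 20 percent needs a director."},
    {"id": "kb-3", "roles": {"engineering"}, "text": "Rotate the admin console certificate every 90 days."},
]


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts (a stand-in for an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, role: str, k: int = 2):
    """Rank only the documents the user's role may see; drop zero-similarity hits."""
    q = vectorize(query)
    visible = [d for d in DOCS if role in d["roles"]]
    scored = sorted(((cosine(q, vectorize(d["text"])), d) for d in visible),
                    key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]


def build_prompt(query: str, role: str) -> str:
    context = "\n".join(f"[{d['id']}] {d['text']}" for d in retrieve(query, role))
    return f"User role: {role}\nContext:\n{context}\n\nQuestion: {query}\nAnswer using only the context."


hits = retrieve("How do I reset a locked account?", role="support")
print([d["id"] for d in hits])  # ['kb-1']
```

Filtering by role before ranking, rather than after, keeps restricted content out of the prompt entirely, which matters once the knowledge base spans teams with different access rights.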

- Continuous Learning

User Feedback – Users can provide feedback on the assistant’s responses, further refining its understanding and accuracy.

Ongoing Content Integration – New knowledge sources are continuously integrated to maintain the system’s relevancy.
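User feedback only improves the system if individual ratings turn into action. The sketch below assumes a hypothetical thumbs-up/down vote per answer, credits each vote to every document the answer cited, and flags documents whose helpful ratio falls below a threshold for human review. The class name, thresholds, and document IDs are illustrative.

```python
from collections import defaultdict


class FeedbackLog:
    """Tracks helpful / not-helpful votes per source document (hypothetical design)."""

    def __init__(self, min_votes: int = 3, min_helpful_ratio: float = 0.5):
        self.votes = defaultdict(lambda: [0, 0])  # doc_id -> [helpful, not_helpful]
        self.min_votes = min_votes
        self.min_helpful_ratio = min_helpful_ratio

    def record(self, doc_ids, helpful: bool) -> None:
        """Attribute one vote on an answer to every document that answer cited."""
        for doc_id in doc_ids:
            self.votes[doc_id][0 if helpful else 1] += 1

    def needs_review(self):
        """Documents with enough votes and a low helpful ratio, worst first."""
        flagged = []
        for doc_id, (up, down) in self.votes.items():
            total = up + down
            if total >= self.min_votes and up / total < self.min_helpful_ratio:
                flagged.append((up / total, doc_id))
        return [doc_id for _, doc_id in sorted(flagged)]


log = FeedbackLog()
log.record(["kb-1"], helpful=True)
log.record(["kb-1", "kb-7"], helpful=False)
log.record(["kb-7"], helpful=False)
log.record(["kb-7"], helpful=True)
log.record(["kb-7"], helpful=False)
log.record(["kb-1"], helpful=False)
for _ in range(3):
    log.record(["kb-2"], helpful=True)
print(log.needs_review())  # ['kb-7', 'kb-1']
```

Requiring a minimum number of votes keeps a single bad rating from flagging an otherwise reliable document.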

Use Cases

Accelerating Knowledge Transfer Across Your Organization

- Onboarding New Hires

Sales Representatives – The assistant provides on-demand access to product demos, competitor analyses, and successful sales playbooks, reducing reliance on senior reps for basic knowledge acquisition.

Software Developers – Offers quick access to code examples, API documentation, and troubleshooting guides relevant to the company’s codebase, accelerating developer onboarding.

Customer Support Specialists – Equips new agents with a comprehensive understanding of common customer issues, product knowledge, and internal support workflows.

- Empowering Presales Teams

Presales consultants can instantly access relevant case studies, past solution blueprints, and client testimonials tailored to specific prospect requirements, helping them develop proposals faster and improve win rates.

- Facilitating Cross-Team Collaboration

Marketing & Sales Alignment – Enables both teams to access and share customer insights, market trends, and competitor data for more cohesive marketing messaging and sales strategies.

Engineering & Operations Collaboration – Bridges the knowledge gap between developers and IT operations by providing a central repository for deployment procedures, infrastructure configurations, and troubleshooting best practices.

Product Management & Customer Success – Empowers product managers to understand customer needs and pain points through real-time sentiment analysis of support conversations, fostering data-driven product development.
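Sentiment analysis of support conversations can be illustrated with a deliberately simple lexicon-based scorer that averages sentiment per product area so the most negative areas surface first. The word lists, product-area tags, and function names are hypothetical; a production system would typically use a trained sentiment model and score conversations as they arrive rather than in a batch.

```python
import re
from collections import defaultdict

# Hypothetical word lists; a trained sentiment model would replace these.
POSITIVE = {"great", "love", "fast", "easy", "thanks", "resolved"}
NEGATIVE = {"slow", "broken", "confusing", "crash", "crashes", "frustrated", "bug"}


def sentiment(message: str) -> int:
    """Count of positive words minus count of negative words in one message."""
    words = re.findall(r"[a-z']+", message.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def pain_points(conversations):
    """Average sentiment per product area, most negative first."""
    scores = defaultdict(list)
    for area, message in conversations:
        scores[area].append(sentiment(message))
    averages = {area: sum(s) / len(s) for area, s in scores.items()}
    return sorted(averages.items(), key=lambda item: item[1])


chats = [
    ("export", "The CSV export crashes and the error is confusing."),
    ("export", "Export is slow on large reports."),
    ("search", "Search is fast and easy, thanks!"),
]
print(pain_points(chats))  # [('export', -1.5), ('search', 3.0)]
```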

- Preserving Institutional Knowledge

Capturing Expertise – Codifies the knowledge of veteran employees by extracting insights from their emails, project documents, and internal communications, preserving their valuable expertise.

Facilitating Knowledge Sharing Between Geographically Dispersed Teams – Offers a central knowledge repository accessible to employees across global locations, reducing reliance on synchronous communication for knowledge transfer.
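One way to make veteran employees' expertise findable is an index of who writes most about which terms. The sketch below is a hypothetical illustration that builds such an index from raw term counts in authored documents, with a small stopword list, and ranks people for a topic query. It is not a full insight-extraction pipeline; the author names, sample texts, and function names are invented.

```python
import re
from collections import Counter, defaultdict

STOPWORDS = {"the", "a", "an", "and", "or", "to", "of", "in", "on", "for", "is", "we", "it", "with"}


def terms(text: str):
    """Lower-cased tokens longer than two characters, minus stopwords."""
    return [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS and len(w) > 2]


def build_expertise_index(documents):
    """Map each term to a Counter of authors, weighted by how often each author uses it."""
    index = defaultdict(Counter)
    for author, text in documents:
        for term, count in Counter(terms(text)).items():
            index[term][author] += count
    return index


def who_knows(index, topic: str, top_n: int = 2):
    """Authors ranked by their combined usage of the topic's terms."""
    scores = Counter()
    for term in terms(topic):
        scores.update(index.get(term, Counter()))
    return [author for author, _ in scores.most_common(top_n)]


docs = [
    ("alice", "Kafka consumer lag fix: increase partitions and tune the Kafka batch size."),
    ("bob", "Terraform module for the staging VPC and Kafka brokers."),
    ("alice", "Postmortem: Kafka rebalancing storm during deploy."),
]
index = build_expertise_index(docs)
print(who_knows(index, "kafka rebalancing"))  # ['alice', 'bob']
```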

- Standardizing Best Practices

Creates a readily accessible repository of documented procedures and successful workflows, ensuring consistent application of best practices across departments.