AI guardrails are a set of policies, frameworks and technical constraints put in place to ensure that AI systems operate safely, ethically, legally and consistently with an organisation’s own standards. Think of them as safety rails for AI: they keep it from driving off a ledge and producing harmful, biased or non-compliant results.

Key Objectives of AI Guardrails

- Prevent Harmful Outputs: Guardrails help prevent harmful or inappropriate content, such as offensive, false or toxic material, so that AI does not generate outcomes that could harm individuals or society.

- Mitigate Bias: Guardrails are critical for identifying and addressing bias in AI models. By continuously monitoring and adjusting AI outputs, they promote fairness and equal access and help prevent AI decisions from discriminating against particular groups.

- Protect Data Privacy: AI models often process sensitive data such as Personally Identifiable Information (PII), Protected Health Information (PHI) and financial information. Guardrails help ensure that such data is handled securely and in line with privacy regulations such as GDPR and HIPAA.

- Ensure Compliance with Legal & Industry Standards: AI must meet legal, industry and organisational standards. Guardrails enforce these requirements so that AI systems comply with all relevant laws; without them, organisations face legal and financial risk.

- Foster Trust: By demonstrating transparency and accountability in AI development, guardrails help strengthen public and stakeholder trust. If people know that AI is used ethically and in legally responsible ways, they are more likely to want to use it.
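
The bias-mitigation objective above is often made measurable with a fairness metric. The sketch below is one illustrative option, not a prescribed method: it computes the demographic parity difference, the gap between the highest and lowest rate of positive decisions across groups. The function names, the sample loan-approval data and the 0.1 threshold are hypothetical choices made for this example.

```python
from collections import defaultdict


def positive_rates(decisions, groups):
    """Return the share of positive (1) decisions for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest positive-decision rate across groups.

    0.0 means every group receives positive decisions at the same rate.
    """
    rates = positive_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan-approval decisions (1 = approved) for two groups.
decisions = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

gap = demographic_parity_difference(decisions, groups)
THRESHOLD = 0.1  # assumed tolerance; the real limit is a policy decision
print(f"parity gap = {gap:.2f}")
if gap > THRESHOLD:
    print("Bias guardrail triggered: route decisions for human review")
```

Here group A is approved 75% of the time and group B 25%, so the gap of 0.50 exceeds the assumed threshold. Demographic parity is only one of several fairness metrics; which one fits depends on the use case.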

Types of AI Guardrails

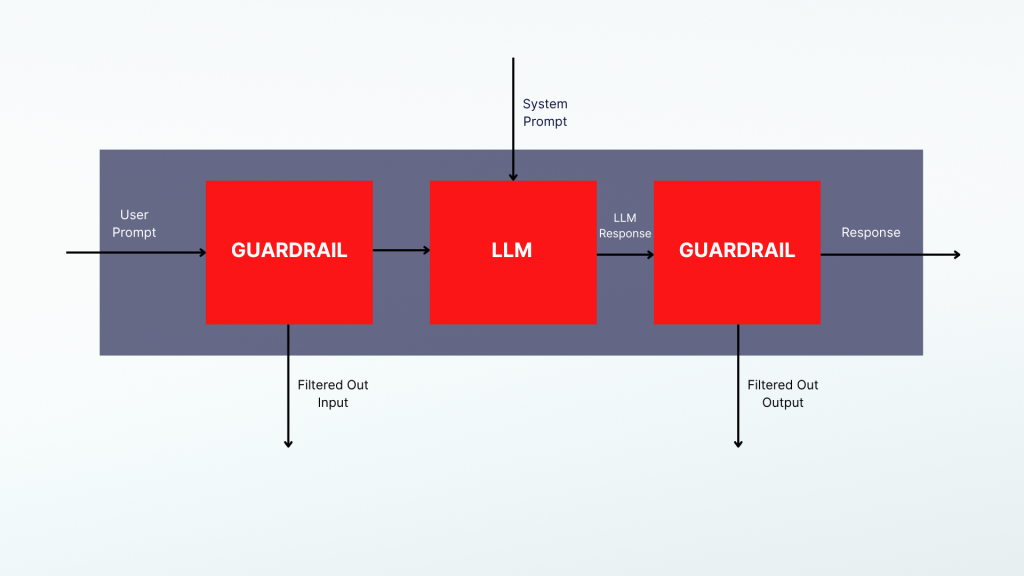

How AI Guardrails Work

AI guardrails typically function through a combination of:

- Policy Setting: Defining ethical standards, acceptable use cases, and data handling procedures.

- Technical Controls: Embedding rules into AI models to restrict certain behaviors or outputs.

- Monitoring & Auditing: Continuously reviewing AI outputs for compliance, bias, or errors, with real-time intervention if issues are detected.

- Correction Mechanisms: Automatically or manually correcting flagged outputs before they reach users.

- Logging & Reporting: Maintaining detailed logs for transparency, accountability, and regulatory review.
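
The mechanisms above can be combined into a single output filter that runs before a response reaches the user. The following is a minimal sketch, assuming plain-text model output and simple keyword and regex rules; `apply_guardrails`, `GuardrailResult`, the blocked phrases and the PII patterns are all hypothetical names chosen for illustration. Production systems typically rely on trained classifiers and dedicated PII detectors rather than hand-written patterns.

```python
import logging
import re
from dataclasses import dataclass, field

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("guardrails")

# Policy setting (hypothetical rules): phrases that must never appear in output.
BLOCKED_TERMS = ("how to make a weapon", "stolen card numbers")

# Simple PII patterns (illustrative only; real detectors are far more thorough).
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


@dataclass
class GuardrailResult:
    allowed: bool
    text: str
    flags: list = field(default_factory=list)


def apply_guardrails(output: str) -> GuardrailResult:
    """Check one model output, correct it where possible, and log the decision."""
    lowered = output.lower()

    # Technical control + monitoring: reject outputs that contain a blocked phrase.
    hits = [f"blocked_term:{term}" for term in BLOCKED_TERMS if term in lowered]
    if hits:
        log.warning("blocked output flags=%s", hits)
        return GuardrailResult(False, "[response withheld by policy]", hits)

    # Correction mechanism: redact PII instead of rejecting the whole output.
    corrected, flags = output, []
    for label, pattern in PII_PATTERNS.items():
        corrected, count = pattern.subn(f"[{label}]", corrected)
        if count:
            flags.append(f"redacted:{label}:{count}")

    # Logging & reporting: keep an audit record of every decision.
    log.info("allowed output flags=%s", flags)
    return GuardrailResult(True, corrected, flags)
```

For example, `apply_guardrails("Contact jane.doe@example.com or 555-123-4567.")` returns an allowed result whose text is `Contact [EMAIL] or [PHONE].`. Redacting rather than blocking keeps useful answers flowing while removing the sensitive part; outright rejection is reserved for outputs that break policy.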

Why Are AI Guardrails Important?

- Ethical Assurance: Helps ensure that AI does not perpetuate socially harmful stereotypes or make morally dubious decisions.

- Legal Security: Helps companies avoid regulatory sanctions and legal risk.

- Operational Safety: Reduces the likelihood of AI failures and mistakes, data leaks and breaches, and reputational damage.

- Enables Innovation: Empowers businesses to deploy AI safely and securely at scale.

How Focaloid Can Help

Using secure enterprise solutions, Focaloid helps organisations adopt AI technology successfully and scale it. With deep expertise in generative AI and machine learning, combined with cloud engineering, Focaloid bridges experimentation and on-the-ground deployment. Our methodology emphasises practical, scalable AI integration into core products and processes, anchored by robust governance, smooth orchestration and demonstrable performance.

- Safe Deployment of AI and GenAI: Focaloid focuses on the responsible and secure implementation of AI and GenAI technologies. This includes:

- Full-stack AI Integration: Architecting, integrating and scaling AI capabilities such as predictive modelling, copilots and retrieval-augmented generation (RAG) systems.

- Risk Management: Providing a comprehensive safety net that manages risk, builds user confidence and ensures compliance with industry standards.

- Governance and Compliance: Establishing strong governance frameworks and technical controls that help ensure the responsible and secure use of AI.

- Faster Adoption Accelerators: Delivering working solutions and accelerators that shorten AI implementation time while maintaining safety and compliance.

By concentrating on these pillars, Focaloid helps businesses move beyond the proof-of-concept (PoC) stage and realize tangible outcomes while sustaining AI innovation.

In summary, AI guardrails are necessary to keep AI systems aligned with responsible, safe and values-based guidelines that reflect both societal and organisational values. They offer a structured way to address the risks and issues that come with increasingly sophisticated AI systems.